How do AI detectors work? Do they have secret mind-reading powers? Well... not quite. They are also not mediums (relaxed exhale). BUT they do use some clever tricks to guess if your text came from an AI writing tool or your own brain. These checkers scan your words for patterns that feel more machine-like than human.

But if it works, then let it do its job, right? Ehhh... no. It really pays to understand how an AI detector works. Knowing how it gets the job done can help you write with more confidence and avoid unexpected false flags. So, let’s explore what’s going on behind the scenes, and how accurate these AI checkers are.

What Is an AI Detector?

An AI detector is a tool that checks text, images, or other content to spot signs that it was made by a generative AI tool. The goal is to flag materials that are AI-generated instead of human-created.

People rely on these tools for different reasons. For example, educators use AI writing detectors to check essays, publishers use them to protect original work, and hiring managers use them to check job applications or writing samples. Content creators and website owners use AI detectors too, because Google has made it clear that low-quality, AI-generated content can hurt SEO rankings if it’s not original or useful.

And yeah — this matters. A lot. But why? Well, because when AI-generated content slips through, it can mess things up big time. Plagiarism gets a free pass. Fake info spreads like wildfire. Trust tanks — especially in schools, newsrooms, anywhere we rely on human writing and original work. That’s why AI detection is becoming part of the process when people just want to make sure content is original and honest.

Want to make your AI writing detection-resistant?

Try GPTinf today and see how easy it is to bypass even the toughest AI detectors — in seconds, no hassle.

Types of AI Detection Tools

There’s more than one way to detect AI. Here are a few common types you’ll see:

AI Writing Detectors

AI writing detectors (also known as AI content detectors) check text for signs that it was written by AI tools or machine learning models. These tools are on the lookout for unusual patterns, repetition, or unnatural phrasing that human-authored writing usually doesn’t have (we'll explain more about this in the next section). Students, bloggers, and businesses often rely on them to check essays, articles, or website copy.

AI Image and Video Detectors

Text isn’t the only thing AI can produce. Generative models can also create realistic photos and videos (even faces that don’t exist). AI image and video checkers help spot deepfakes or visuals that were never shot by a real camera. These tools look for digital fingerprints: little clues hidden in pixels. This is key for publishers, journalists, and social platforms trying to stop fake content from spreading.

AI Audio Detectors

Oh — don’t forget voices. AI-generated audio is getting creepy good. So, some AI recognition solutions now listen for robotic voice patterns or weird tone shifts. Handy for podcasts, interviews, or anywhere a fake voice could fool people.

How AI Content Detectors Work: Core Detection Technologies

So, let's get to the main point of this article: how do AI checkers work to spot if your words came from you… or an AI-powered engine? Here’s the breakdown of the core methods of AI detection, why they work, and where they can sometimes slip up.

TL;DR Core Detection Methods Table

| Method | What It Checks For | How Is AI Detected | Where It Slips Up |
| --- | --- | --- | --- |
| Perplexity & Burstiness | Predictability & sentence variety | Human writing is messier, less predictable. | Polished human copy or trickier AI can fake it. |
| Probability Patterns | Are your word choices too “safe”? | AI sticks to top-choice words too much. | Heavily edited text created by AI can pass as human. |
| Stylometric Analysis | Your style fingerprint: tone, quirks, flow | Humans have unique style markers. | Short text or a generic style can confuse it. |
| Machine Learning Classifiers | Big-picture pattern matching | Trained on tons of examples, learns subtle clues. | Needs constant updates to stay sharp. |

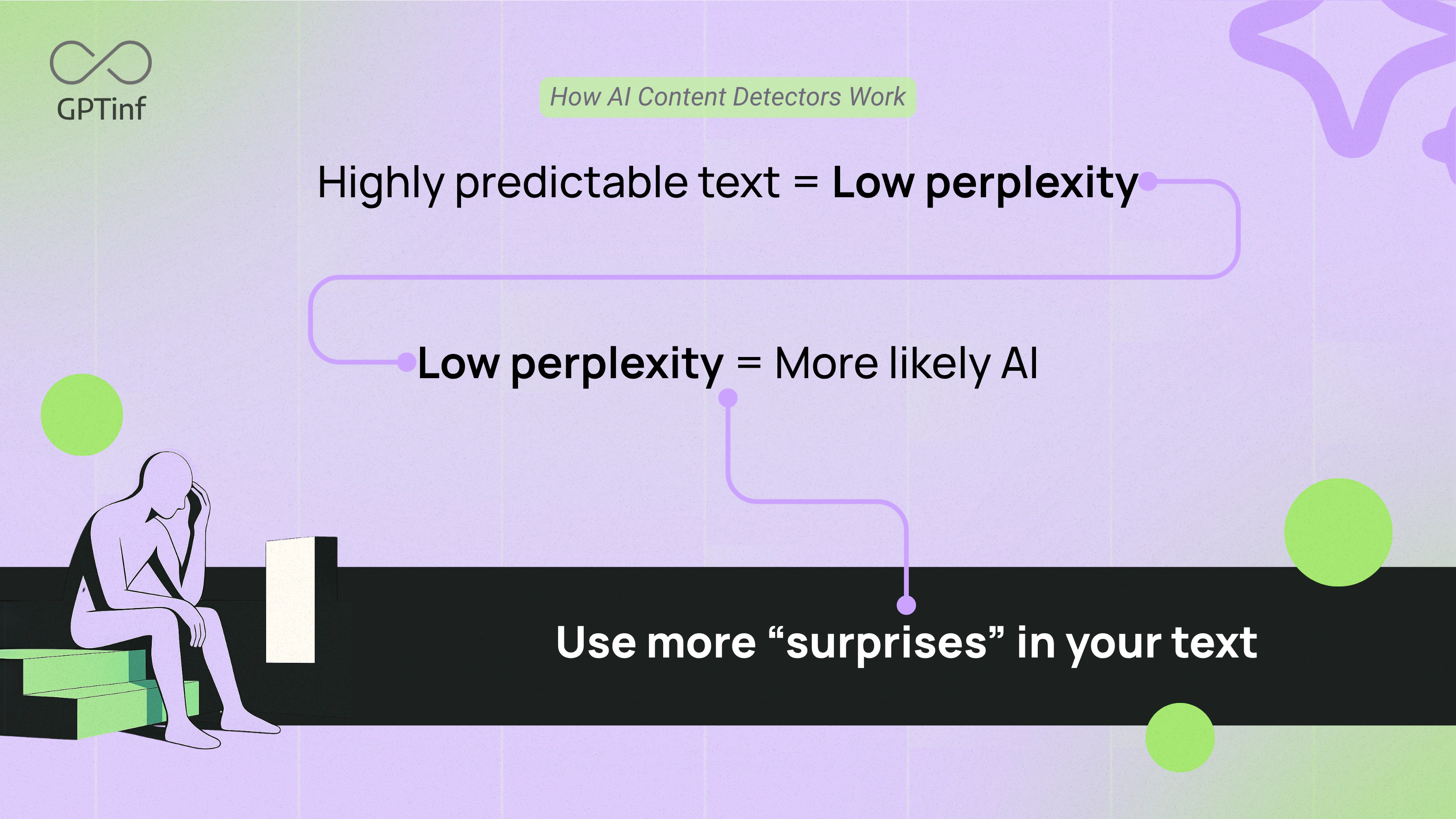

Perplexity & Burstiness

First up: perplexity. It’s a fancy word for how predictable your text is to a language model. Low perplexity means few surprises, and AI-generated content usually scores low: same sentence structures, safe word choices, smooth flow, no weird surprises. But is genuine human writing different? Ohh, yesss... way messier and more varied. We throw in odd phrases, switch tone, and break rhythm.

Then there’s burstiness. Humans mix short and long sentences, formal and casual bits. AI often stays… samey (every sentence feels cut from the same template). So if an AI checker sees low perplexity + low burstiness, it goes: hmm, maybe a bot wrote this.

Limitations:

- A skilled human writer using very formulaic or polished language might inadvertently produce text with low perplexity and low burstiness, triggering a false alarm.

- AI can fake variety by injecting randomness or varying its style to boost perplexity and burstiness.

- Alone, these clues can misfire. Best used with other checks.
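To make these two signals a bit more concrete, here is a minimal sketch. It is not how any particular detector computes them: real tools score text with a large language model, while this toy version swaps in a tiny add-one-smoothed word-frequency model. The function names, the reference text, and the sample sentences are all made up for illustration.

```python
import math
import re
from collections import Counter
from statistics import mean, pstdev


def tokenize(text):
    """Lowercase word tokens; apostrophes are kept so contractions stay whole."""
    return re.findall(r"[a-z']+", text.lower())


def unigram_perplexity(text, reference_text):
    """Perplexity of `text` under an add-one-smoothed unigram model built
    from `reference_text` (a toy stand-in for a real language model)."""
    counts = Counter(tokenize(reference_text))
    total = sum(counts.values())
    vocab_size = len(counts) + 1  # one extra slot for unseen words
    tokens = tokenize(text)
    log_prob = sum(math.log((counts[t] + 1) / (total + vocab_size)) for t in tokens)
    return math.exp(-log_prob / len(tokens))


def burstiness(text):
    """Spread of sentence lengths: standard deviation divided by the mean."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    lengths = [len(tokenize(s)) for s in sentences]
    return pstdev(lengths) / mean(lengths)


# Hypothetical sample data, chosen only to show the contrast.
reference = "the cat sat on the mat and the dog sat on the rug"
flat = "The cat sat. The dog sat. The cat ran."
varied = "No. The storm rolled in over the hills before anyone had time to close the windows. We ran."

print("predictable:", unigram_perplexity("the cat sat on the mat", reference))
print("surprising: ", unigram_perplexity("quantum ferrets juggle marmalade", reference))
print("burstiness: ", burstiness(flat), "vs", burstiness(varied))
```

Familiar words score a lower perplexity than unexpected ones, and the evenly sized sentences score zero burstiness. A real detector would compare scores like these against thresholds learned from lots of text, not against two toy samples.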

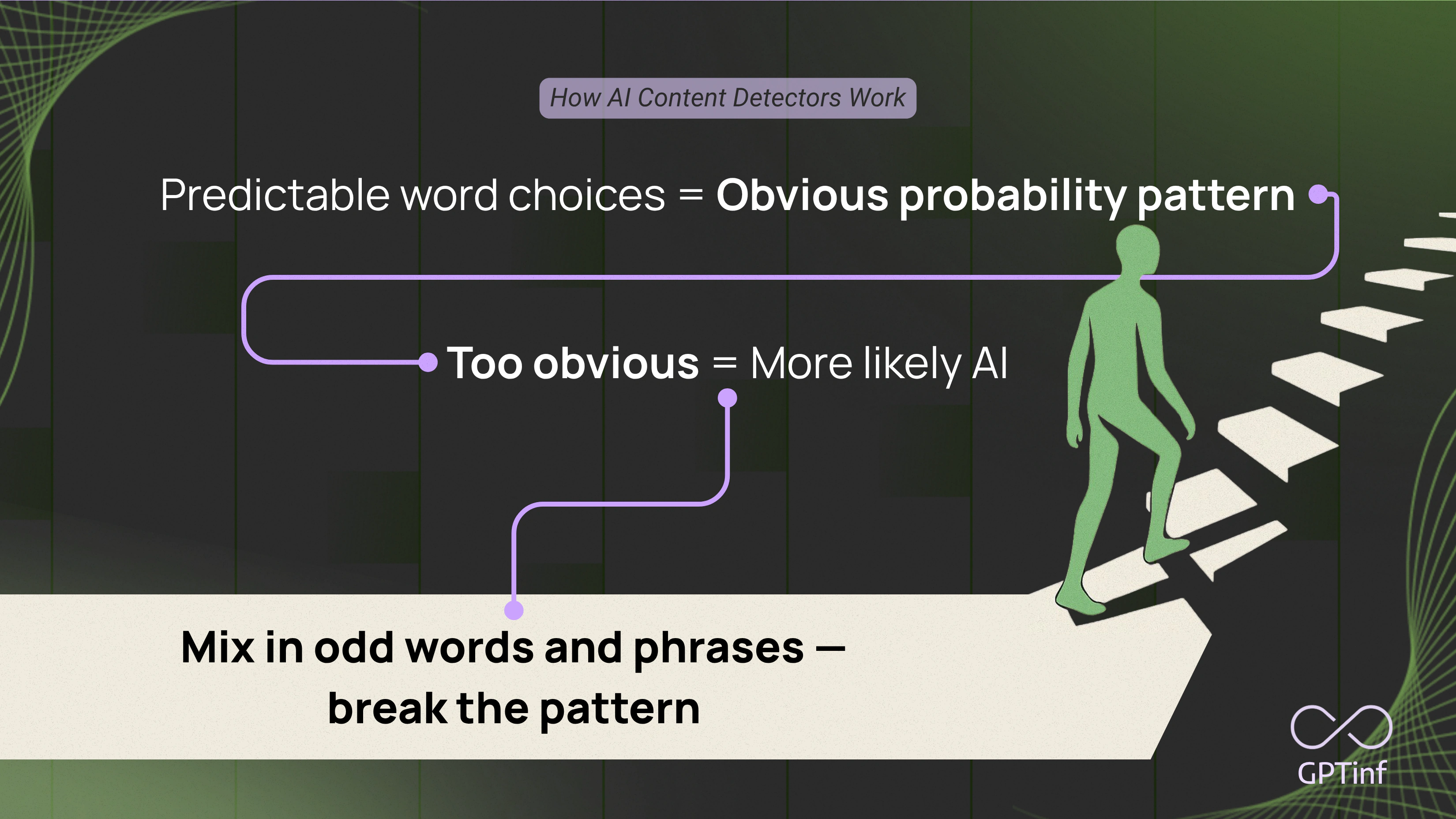

Probability Patterns

Another technique looks at probability patterns in the text — essentially asking: How likely is each word or phrase, given the context?

AI models tend to select words that are statistically very likely to come next, which can make content sound overly predictable. What about us? We get unpredictable. We may use an unusual word or an odd turn of phrase.

In practice, AI detectors analyze the text with a language model to see if the words used are the obvious ones an AI would choose, or something more eclectic.

Limitations:

- Heavily edited AI text can pass for human.

- AI algorithms can purposely use some unconventional phrasing.

- Certain human-written materials (legal docs, basic reports) might naturally use very predictable language throughout (even though an AI writing bot didn't generate it).

- Performs best alongside other signals.
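Here is a rough sketch of the "is each word the obvious next choice?" idea. It assumes a toy bigram model (counts of which word follows which) in place of the large language model a real detector would use, and the corpus, sample sentences, and function names are invented for the example.

```python
import re
from collections import Counter, defaultdict


def tokenize(text):
    """Lowercase word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def build_bigram_model(corpus):
    """Map each word to a Counter of the words that follow it in the corpus."""
    model = defaultdict(Counter)
    tokens = tokenize(corpus)
    for prev, nxt in zip(tokens, tokens[1:]):
        model[prev][nxt] += 1
    return model


def top_k_fraction(text, model, k=1):
    """Share of words that are among the model's k most likely continuations."""
    tokens = tokenize(text)
    pairs = list(zip(tokens, tokens[1:]))
    if not pairs:
        return 0.0
    hits = 0
    for prev, actual in pairs:
        followers = model.get(prev, Counter())
        top = [word for word, _ in followers.most_common(k)]
        if actual in top:
            hits += 1
    return hits / len(pairs)


# Hypothetical corpus and samples, for illustration only.
corpus = "the cat sat on the mat the cat sat on the sofa the cat ate the fish"
model = build_bigram_model(corpus)

safe_text = "the cat sat on the mat"
odd_text = "the fish ate the sofa"
print("safe:", top_k_fraction(safe_text, model))
print("odd: ", top_k_fraction(odd_text, model))
```

The higher the share of top-choice words, the more "safe" the text looks to the model. On its own that proves nothing, which is exactly why the limitations above matter.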

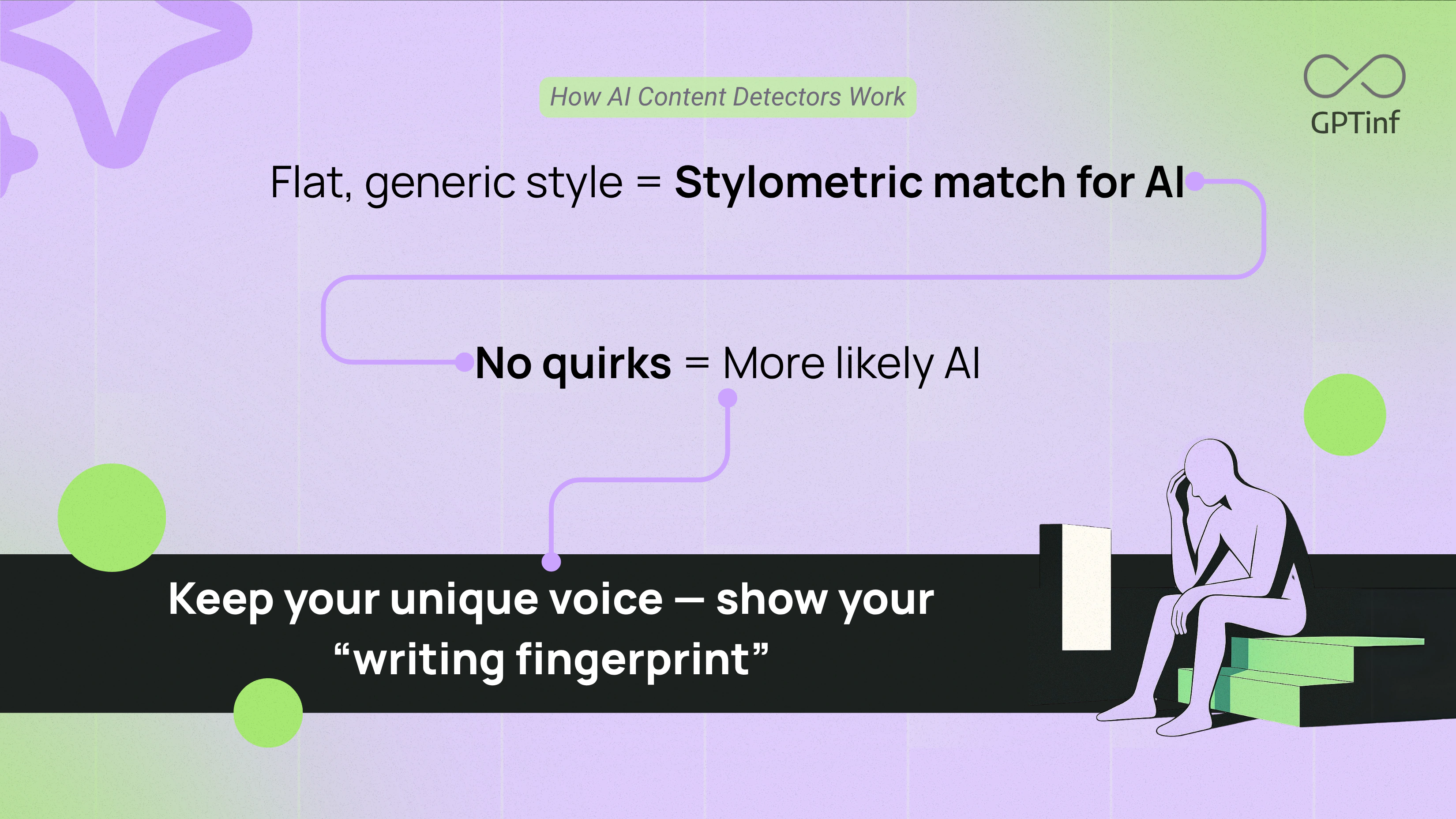

Stylometric Analysis

Every writer has a unique style — a kind of linguistic "fingerprint." Stylometric analysis uses that by examining the stylistic features of the text. Instead of focusing on word probabilities, this method looks at how the text is written. It checks things like sentence length, vocabulary, grammar quirks, and even the tone or level of formality — stuff we add without thinking.

AI writing tools like ChatGPT, Gemini, or Claude often sound flat or overly formal. Like: "The outcome was uncertain. Nevertheless, the attempt was made." Kinda stiff, right? The human version would be way more casual and natural: "I wasn’t sure what’d happen, but I gave it a shot."

Limitations:

- Modern AI models are getting better at mimicking the way people write (including injecting humor, varying sentence structure, or imitating a particular author’s voice).

- Accuracy improves with longer texts. Short ones don’t give enough clues.

- Not all of us have a strongly distinctive writing style. Some authentic human writing is very generic or deliberately formal, which can look “AI-like” under stylometric metrics.

- Proves more effective in tandem with other detection methods.
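As a small illustration of what a "style fingerprint" might look like in numbers, the sketch below pulls a handful of simple features out of the two example sentences quoted above. The feature set and the function name are assumptions picked for this example; real stylometric systems use many more signals.

```python
import re
from statistics import mean


def stylometric_features(text):
    """Return a few simple style measurements for a piece of text."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    lowered = [w.lower() for w in words]
    contractions = [w for w in words if "'" in w]
    return {
        "avg_sentence_length": len(words) / len(sentences),
        "avg_word_length": mean(len(w) for w in words),
        "type_token_ratio": len(set(lowered)) / len(words),  # vocabulary variety
        "contraction_rate": len(contractions) / len(words),  # rough informality cue
    }


formal = "The outcome was uncertain. Nevertheless, the attempt was made."
casual = "I wasn't sure what'd happen, but I gave it a shot."

formal_features = stylometric_features(formal)
casual_features = stylometric_features(casual)
for name in formal_features:
    print(f"{name}: formal={formal_features[name]:.2f} casual={casual_features[name]:.2f}")
```

The stiff version uses longer words and no contractions, while the casual one leans on contractions and short words. With only a sentence or two to go on, though, these numbers are noisy, which matches the short-text limitation above.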

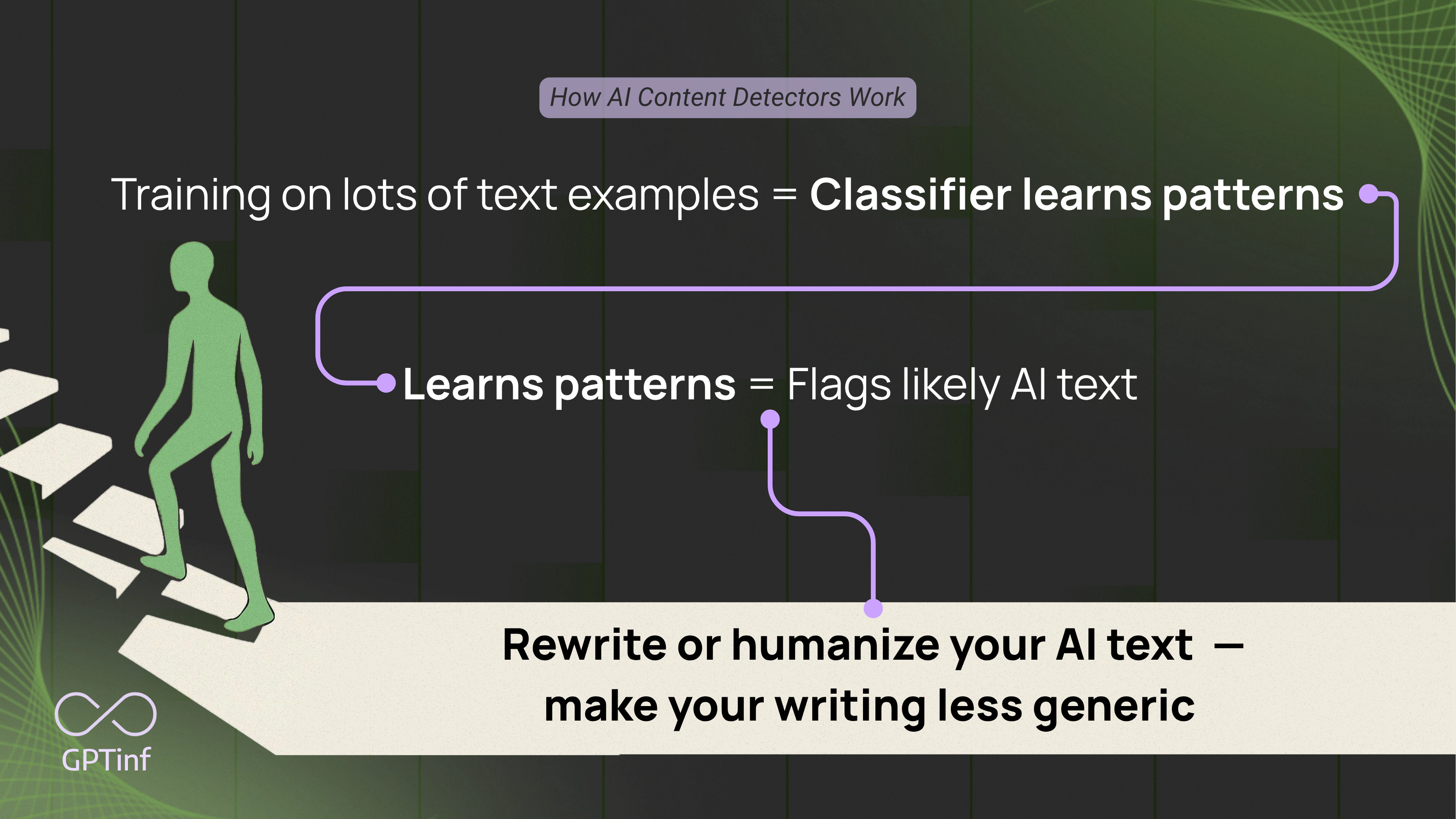

Machine Learning Classifiers

Finally, many detectors use machine learning classifiers to tie it all together. Basically, another AI learns to spot if AI was used. It trains on thousands of text examples: real human writing, AI-generated copy, and everything in between. Over time, it gets good at guessing what’s what.

What are the perks? A dedicated machine learning detector can weigh hundreds of features simultaneously and isn’t limited to a single clue or rule. It learns the aggregate differences. This makes it quite powerful. For instance, it could notice a combination of subtle cues that together give away an AI origin.

Cool? Sure. Perfect? Nope.

Limitations:

- The effectiveness of a classifier depends heavily on the quality and breadth of its training data. Stale training data makes it easy to fool, because AI writing styles keep evolving.

- Requires continual maintenance.

- Can’t always explain why it flagged your text.

- False positives happen — especially if you write super polished copy.

- Clever tweaking of AI-crafted content (such as paraphrasing or “humanizing” the output) can sometimes fool a classifier.
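To show the general shape of the idea, here is a minimal sketch of a classifier that learns from labeled feature vectors. Everything in it is an assumption made for illustration: a plain logistic regression written from scratch, two hand-picked features (burstiness and contraction rate), and eight invented training rows. Production detectors train far larger models on far more data.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_ai_probability(features, weights, bias):
    """Estimated probability that the text behind `features` is AI-written."""
    return sigmoid(bias + sum(w * x for w, x in zip(weights, features)))


def train_logistic_regression(rows, labels, epochs=2000, learning_rate=0.5):
    """Fit weights with full-batch gradient descent on the log loss."""
    weights = [0.0] * len(rows[0])
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * len(weights)
        grad_b = 0.0
        for x, y in zip(rows, labels):
            error = predict_ai_probability(x, weights, bias) - y
            for i, xi in enumerate(x):
                grad_w[i] += error * xi
            grad_b += error
        weights = [w - learning_rate * g / len(rows) for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b / len(rows)
    return weights, bias


# Invented training data: (burstiness, contraction_rate); label 1 = AI, 0 = human.
rows = [
    (0.10, 0.00), (0.15, 0.01), (0.20, 0.00), (0.12, 0.02),  # AI-like
    (0.70, 0.10), (0.90, 0.15), (0.60, 0.08), (0.80, 0.12),  # human-like
]
labels = [1, 1, 1, 1, 0, 0, 0, 0]

weights, bias = train_logistic_regression(rows, labels)
print("flat, formal text:  ", predict_ai_probability((0.10, 0.00), weights, bias))
print("varied, casual text:", predict_ai_probability((0.85, 0.12), weights, bias))
```

Notice that the output is a probability, not a verdict, and that the model only knows the patterns present in its training rows. Feed it text that differs from what it has seen and its guesses get shaky, which is the "old training" limitation in action.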

How Accurate Are AI Content Detection Tools?

So, how accurate are these AI detectors really? They might sound impressively accurate based on their marketing, but real-world performance often tells a different story. Many detector tools advertise detection rates in the 95–99% range. For example, GPTZero claims it is 99% accurate, and Copyleaks advertises 99.8% accuracy in its results. This creates the impression that they rarely fail.

In practice, independent evaluations have found these systems to be much less reliable. For example, one academic study testing a dozen detectors concluded they were "neither accurate nor reliable." Similarly, a team of University of Maryland students reported that such tools flag some human-written text as AI and can be easily circumvented by humanizing and paraphrasing.

In other words, despite bold vendor claims, when you use AI detectors, remember they’re giving you a guess, not a fact carved in stone.

A big limitation is the trade-off between catching AI content and avoiding false alarms. Any tool can achieve close to 100% AI catch-rate if it’s overly aggressive. But that would mean incorrectly labeling lots of non-AI text as AI. And that's just YIKES.

Sensible systems try to avoid those false positives, yet doing so inevitably lets more AI-crafted text slip through undetected. For instance, Turnitin’s own AI checker is intentionally tuned to miss roughly 15% of AI-generated text to keep false accusations below about 1%. On the flip side, some third-party tools boast “unmatched accuracy” but often omit how often they mislabel normal writing.
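The trade-off is easy to see with a few numbers. In the sketch below, each text gets a detector score between 0 and 1 and is flagged when the score reaches a threshold. The scores are invented for illustration and do not come from any real tool.

```python
def rates_at_threshold(human_scores, ai_scores, threshold):
    """Return (catch_rate, false_positive_rate) when score >= threshold is flagged."""
    caught = sum(score >= threshold for score in ai_scores)
    false_alarms = sum(score >= threshold for score in human_scores)
    return caught / len(ai_scores), false_alarms / len(human_scores)


# Hypothetical detector scores for ten human texts and ten AI texts.
human_scores = [0.05, 0.10, 0.15, 0.20, 0.25, 0.30, 0.35, 0.40, 0.55, 0.60]
ai_scores = [0.45, 0.50, 0.58, 0.65, 0.70, 0.75, 0.80, 0.85, 0.90, 0.95]

for threshold in (0.3, 0.5, 0.7):
    catch_rate, false_positive_rate = rates_at_threshold(human_scores, ai_scores, threshold)
    print(f"threshold {threshold}: catches {catch_rate:.0%} of AI, "
          f"wrongly flags {false_positive_rate:.0%} of human text")
```

With these made-up scores, the aggressive threshold catches every AI text but wrongly flags half of the human ones, while the cautious threshold flags no humans and lets a chunk of AI text through. Picking the threshold is a policy choice, not a technical detail.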

Overall, research and classroom experience suggest that AI detectors are far from foolproof, prone to both false negatives (missing AI content) and false positives (mistakenly tagging genuine writing) under real-world conditions.

Challenges in Detecting AI Generated Content

Even the best AI writing detectors face roadblocks. Here’s how some common challenges stack up:

| Challenge | What Happens | Why It's a Problem |
| --- | --- | --- |
| Adversarial Prompting | People use advanced AI prompts to rewrite or tweak text. | Makes it harder for detectors to identify AI-generated content. A polished rewrite can slip past. |
| Humanized or Paraphrased AI Output | Users run AI-generated content through an AI humanizer tool or just edit it manually. | Changes the signals that AI detectors rely on. The text looks more like human writing. |
| Multilingual Detection | AI language models generate text in many languages, but most AI checkers were trained on English. | Creates blind spots. The detector may miss AI clues or unfairly flag human work. |

False Positives and Ethical Concerns

Here’s the part that makes people nervous: AI content detectors and plagiarism checkers can flag real human-produced output by mistake. Imagine you write a brilliant essay, totally your own, and a checker says your work is AI-generated. Awkward, right? It happens. False positives can hit students, freelancers, job seekers, and anyone whose way with words feels “too perfect” or formulaic. Even if a detector claims to have only a 1% false positive rate, that still means potentially thousands of innocent essays or articles could be flagged given enough volume.
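Here is the back-of-the-envelope math behind that claim, as a small sketch. The 1% false positive rate and the 85% catch rate echo the figures quoted earlier in this article. The essay volume and the share of AI-written essays are pure assumptions, so swap in your own numbers.

```python
def expected_false_flags(human_documents, false_positive_rate):
    """How many genuine human documents get wrongly flagged, on average."""
    return human_documents * false_positive_rate


def flag_precision(ai_share, catch_rate, false_positive_rate):
    """Of all flagged documents, the fraction that really is AI-written."""
    true_flags = ai_share * catch_rate
    false_flags = (1 - ai_share) * false_positive_rate
    return true_flags / (true_flags + false_flags)


# Assumed volume: 200,000 human-written essays checked at a 1% false positive rate.
print("wrongly flagged essays:", expected_false_flags(200_000, 0.01))

# Assumed AI shares of 10% and 1%, with an 85% catch rate and 1% false positive rate.
print("precision when 10% is AI:", round(flag_precision(0.10, 0.85, 0.01), 3))
print("precision when 1% is AI: ", round(flag_precision(0.01, 0.85, 0.01), 3))
```

The second function shows why context matters: when AI-written work is rare, a large share of the flags land on innocent writers even if the detector's error rate looks tiny on paper.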

When schools or employers use AI detectors without context, innocent people can be accused. Some schools have policies on AI use, but the detectors can’t explain exactly why they think your text is AI-generated. And as a result, people might lose grades, jobs, or trust for no reason.

Plus, bias is real. Research shows non-native English writers face more false flags because their simpler style sometimes matches how AI writing tools tend to sound. That’s not fair.

So what’s smart? Don’t rely only on AI detectors. Use them as a clue — not the final word. Combine them with real context, conversation, and common sense.

How to Bypass AI Detectors

So, you know how AI content detectors work. But what if you want to avoid getting flagged in the first place? Good news: there are smart ways to do it. Here’s how people evade AI detectors (and why it works).

Rewriting AI Writing Manually

One option is simple (but time-consuming): rewrite your AI-generated text by hand. Add your own phrases, tweak sentences, mix up the style. Basically, you’re breaking the patterns that AI detectors rely on.

Want practical tips? Check out our full guide: Top 7 Proven Tips to Avoid AI Detection in Writing in 2025. Practical hacks, helpful examples, simple tweaks — all to help your writing pass as 100% you.

AI Humanizers

Don’t have time to rewrite every line yourself? Yeah — who does. This is where AI humanizers come in handy. These tools automatically rewrite AI-generated content to look like it was written by a real person. They tweak perplexity, burstiness, and style — exactly the things AI content detectors look for.

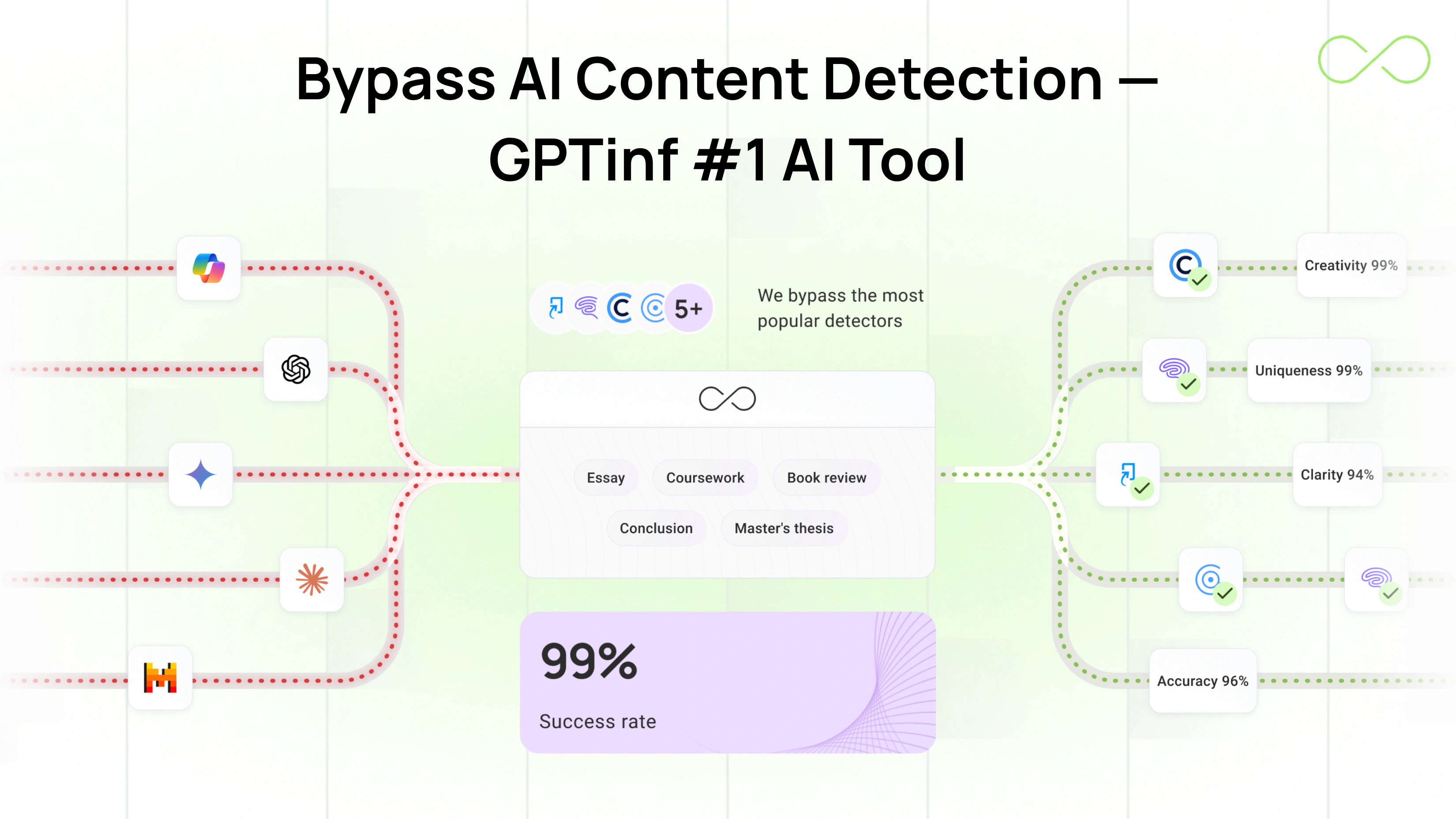

And here’s the deal: not all humanizers function the same. Some just paraphrase. Some simply use one AI to rewrite another AI's output. But a strong AI humanizer tool (like GPTinf) goes deeper.

GPTinf uses a custom, non-AI algorithm designed to make your text detection-resistant while keeping your meaning intact.

Why people like GPTinf:

- Bypass AI detectors — not just paraphrase (99% success rate).

- Has a built-in AI detector to verify your content actually beats all popular AI detectors.

- Keeps your tone and intent intact.

- 8 rewrite styles: Standard, Simple, Academic, Shorten, Expand, Informal, Formal, Creative.

- Great for students, freelancers, and professionals who use generative AI.

- Quick results — human-like output in seconds.

- Supports “freeze list” to protect keywords or phrases you don’t want changed.

- Works on all kinds of AI-generated content — essays, blog posts, ads, you name it.

- "Compare Mode" to view original and humanized text side-by-side.

- Free trial to test the tool & competitive pricing.

Try GPTinf free today and see how fast you can bypass even the toughest AI detectors with clean, natural text.

Manually Beating AI Detectors vs. AI Text Humanizers

So, which method handles the task better — rewriting machine-produced text by hand or using an AI humanizer to rewrite it? Here’s a quick look:

| Category | Manual Rewriting | Using Humanizers |
| --- | --- | --- |
| Effort Needed | High (takes time, focus, and strong writing skills). | Low (fast and easy, the tool does the heavy lifting). |
| Consistency | Results vary (depends on how well you rewrite). | More consistent (reliable tweaks based on tested patterns). |
| Risk of Errors | Possible if you miss subtle AI writing signals or keep text too similar. | Less likely: the best tools (like GPTinf) handle detection clues for you. |
| Best For | Writers who want total control and don’t mind editing line by line. Plus, you can do this after using an AI humanizer to make the text even more natural. | Students, freelancers, or anyone who needs quick, human-like text that really beats AI detectors. |

Not sure which AI humanizer to pick? Here’s a detailed guide that breaks down the best options for humanizing AI text in 2025 — so you can choose the best fit for your needs.

Improving AI Checkers: What’s Next?

So, what’s coming next for AI writing detectors? Lots of researchers are working to make sure AI detectors actually keep up as AI technologies get more advanced. Right now, the signals AI detectors use won’t cut it forever.

New ideas focus on cross-model detection. This means training detectors to spot AI writing from many different AI content generators, not just the ones they were built for. That helps when new free AI models appear overnight.

Another area is hybrid moderation. Instead of relying on AI alone, schools and publishers are blending human and AI checks. Humans are better at context — they can tell if a line was generated by AI or just naturally polished. Pair that with a smart detector, and it’s harder for fake or lazy edits to slip by.

Better detection will also help handle AI and human-written text in many languages, not just English. New tools aim to detect AI content in translated work, mixed human and AI articles, and even tricky cases like AI-generated content in student work.

And one more piece? Educating people about how AI content detectors are used. When you know how AI content detectors actually work, you’re less likely to panic if a detector flags your essay. Plus, you can test your edits.

Conclusion: How Does AI Text Detection Work & Its Accuracy

AI content detectors are tools that scan your text for clues that it was generated by AI. They use patterns like perplexity, burstiness, probability checks, and stylometric signals. Many detectors use machine learning too, trained on huge piles of human and AI text to guess if your work came from you or a generative AI tool.

But remember: no detector is perfect. Some AI detectors identify AI-generated content well, but smart tweaks, paraphrasing, humanizing, or translation can fool them. And they do get it wrong — false positives happen, which can hurt students or writers if people rely on AI detectors alone.

To stay safe? Learn how AI content detectors are used. Know when to rewrite, when to test your text, and when to use a strong AI humanizer like GPTinf if you want to bypass AI detectors with clean, natural text.